So, #ShameOnMetaAI was trending on Twitter because Meta AI was used to generate jokes about Hindu gods, including Lord Ram & Lord Vishnu.

Some tweets say it declined to crack jokes about Prophet Mohammed, while others show screenshots of jokes about him.

We tried this as well, and Meta AI’s output on these jokes is clearly inconsistent. Sometimes it cracks jokes; at other times it doesn’t. If you explicitly ask it for disrespectful jokes, it declines.

You need to keep a few things in mind:

1. AI outputs try to please you. If you want a joke & ask for one, it will try its best to create one. It’s meant to give you the output you want, not an output that is offensive. It assumes that if you’re asking for a joke, you want one (and that it won’t offend, because it’s a joke).

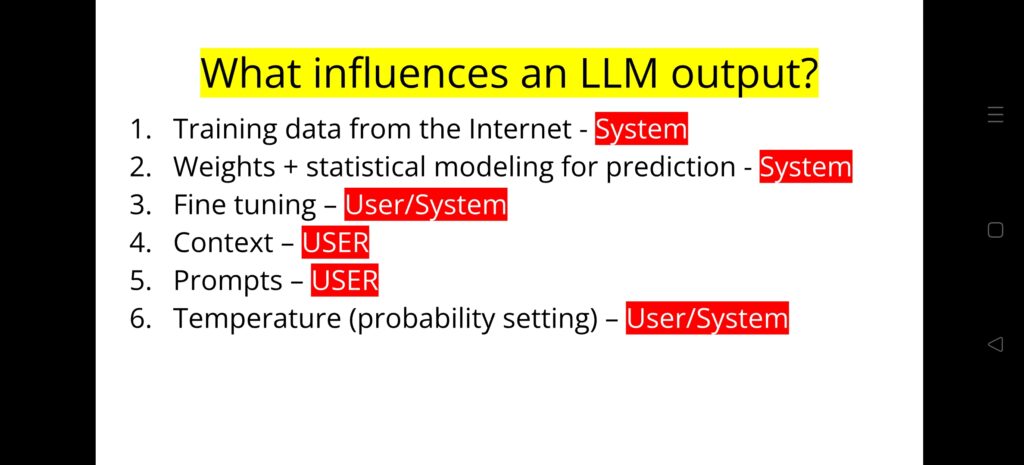

2. Most importantly, an output doesn’t depend only on the system, i.e. the AI model or its training. It’s shaped by the user as well: someone can provide problematic context, and that can lead to a problematic output. These things are still being fixed, but there are *no guarantees*.

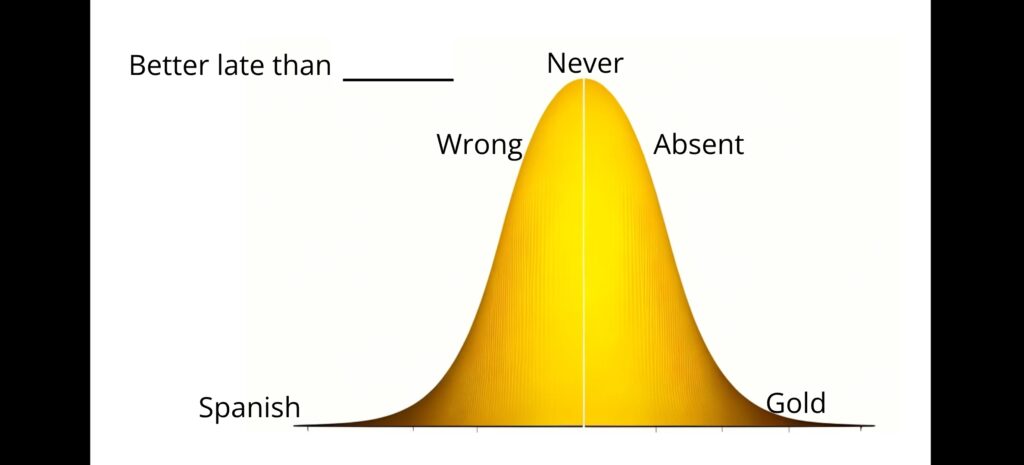

3. The output can be inconsistent and even factually wrong, because it doesn’t pull a joke or any info from a database. It generates a probabilistic output afresh each time. You probably won’t get the same joke twice, because it’s generating on the fly.

4. At times it may not even be able to distinguish between Ram & Lord Ram, or between Mohammed and Prophet Mohammed. These things are tricky for a chatbot because it’s trying to estimate a desired outcome: the outcome that you desire. At times it will be more creative and less accurate; it’s all probability-based. It’s also difficult for an AI company to instruct a chatbot on what it should & shouldn’t say or do, because the chatbot doesn’t have social, religious, or local context. Sometimes an instruction not to make such jokes will work.

5. Sometimes it can be overridden: people have jailbroken such restrictions using prompts. Chatbots will never be 100% accurate or reliable.

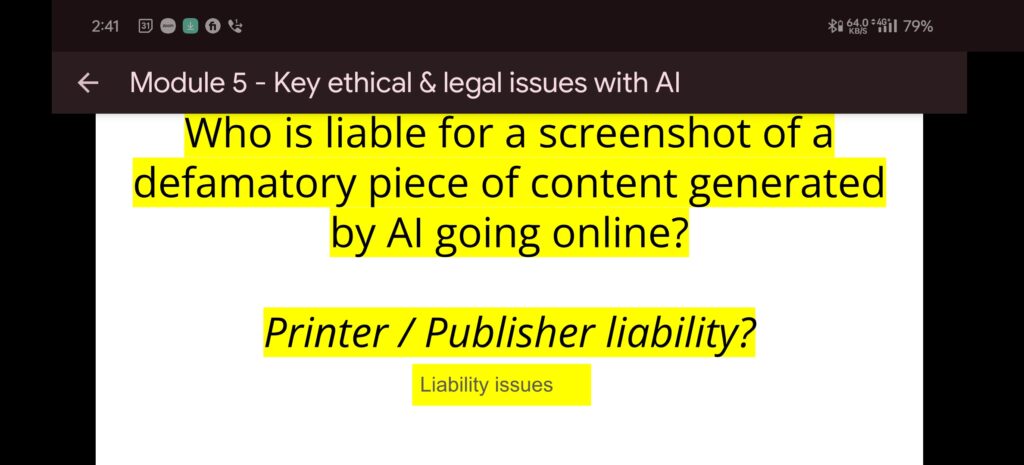

6. So, whose responsibility is this? Whose liability is an output? Is it the developer’s? The user’s? We don’t know yet, because there’s no clear answer on who is responsible: the model, the user, or the person publishing the screenshot.

We will have regulation eventually, and these issues will affect AI and its deployment in India.

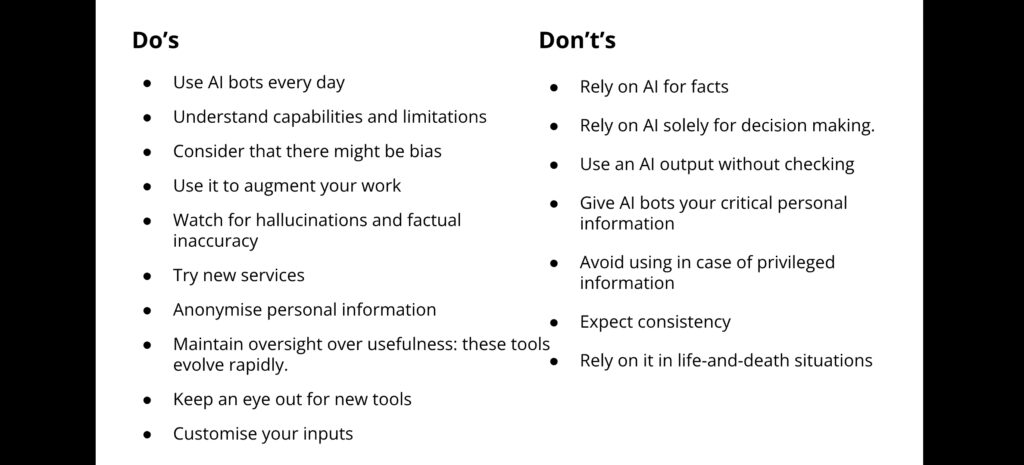
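To make point 3 concrete, here is a toy sketch of why the same prompt can produce different answers. It assumes a simplified model that scores a handful of candidate continuations and then picks one at random, weighted by probability (softmax with a "temperature" knob). The candidate words and scores in `TOY_LOGITS` are invented for illustration, and real chatbots like Meta AI add further layers (system instructions, safety filters) on top of sampling, so this is not a description of how Meta AI itself is built.

```python
import math
import random

# Hypothetical scores ("logits") a model might assign to candidate continuations.
# These numbers are invented for illustration; real models score tens of
# thousands of tokens at every step.
TOY_LOGITS = {"pun": 2.0, "story": 1.5, "riddle": 1.0, "refusal": 0.5}


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(logits: dict[str, float], temperature: float = 1.0, rng=random) -> str:
    """Draw one candidate at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    # The same "prompt" asked five times can yield different continuations,
    # including, occasionally, a refusal.
    for attempt in range(5):
        print(attempt, sample_next(TOY_LOGITS))
```

Running it a few times shows the inconsistency described above: nothing is looked up from a database, so each run is a fresh weighted draw, and even a low-probability option like a refusal turns up now and then.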

Some slides explaining these points from my AI workshop: